
A Review of 10 Most Popular Activation Functions in Neural Networks

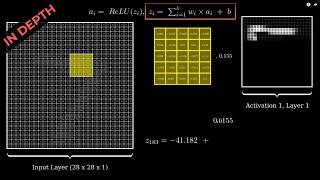

In this video, I discuss 10 activation functions used in machine learning, show their graphs, and explain how their derivatives behave. The activation functions covered are:

1. Linear
2. ReLU
3. Leaky ReLU
4. Sigmoid (also known as the logistic sigmoid function)
5. Tanh (also known as the hyperbolic tangent function)
6. Softplus
7. ELU
8. SELU
9. Swish
10. GELU

By the end of the video, you'll know which activation functions have continuous derivatives and which have discontinuous derivatives, as well as which are monotonic and which are non-monotonic.

Errata: The output of the sigmoid function is between 0 and 1.
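The two properties mentioned in the description, monotonicity and continuity of the derivative, can be checked numerically. The following is a minimal sketch in plain Python, not code from the video. The parameter choices are assumptions: a Leaky ReLU slope of 0.01, ELU with alpha = 1, Swish with beta = 1, the exact erf-based form of GELU, and the commonly quoted SELU constants. The helper names `is_monotonic` and `has_kink_at_zero` are hypothetical, and the kink test only inspects x = 0, the one point where these piecewise definitions switch branches.

```python
import math

# Commonly quoted SELU constants (assumed; not stated in the description).
SELU_SCALE = 1.0507009873554805
SELU_ALPHA = 1.6732632423543772


def linear(x):
    return x

def relu(x):
    return max(0.0, x)

def leaky_relu(x, alpha=0.01):  # slope 0.01 is an assumed default
    return x if x > 0 else alpha * x

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def tanh(x):
    return math.tanh(x)

def softplus(x):
    return math.log1p(math.exp(x))

def elu(x, alpha=1.0):  # alpha = 1 is an assumed default
    return x if x > 0 else alpha * math.expm1(x)

def selu(x):
    return SELU_SCALE * (x if x > 0 else SELU_ALPHA * math.expm1(x))

def swish(x):  # beta = 1 variant, i.e. x * sigmoid(x)
    return x * sigmoid(x)

def gelu(x):  # exact form based on the Gaussian CDF
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


ACTIVATIONS = {
    "linear": linear, "relu": relu, "leaky_relu": leaky_relu,
    "sigmoid": sigmoid, "tanh": tanh, "softplus": softplus,
    "elu": elu, "selu": selu, "swish": swish, "gelu": gelu,
}


def is_monotonic(f, lo=-6.0, hi=6.0, steps=1200):
    """Return True if f is non-decreasing on an evenly spaced grid over [lo, hi]."""
    values = [f(lo + (hi - lo) * i / steps) for i in range(steps + 1)]
    return all(b >= a for a, b in zip(values, values[1:]))


def has_kink_at_zero(f, h=1e-6, tol=1e-3):
    """Compare one-sided finite-difference slopes at x = 0.

    A gap larger than tol suggests the derivative jumps at 0.
    """
    left = (f(0.0) - f(-h)) / h
    right = (f(h) - f(0.0)) / h
    return abs(right - left) > tol


if __name__ == "__main__":
    for name, f in ACTIVATIONS.items():
        print(f"{name:11s} monotonic={is_monotonic(f)} "
              f"kink_at_0={has_kink_at_zero(f)}")
```

Under these assumptions, the grid check flags Swish and GELU as non-monotonic (both dip below zero for moderately negative inputs), and the slope comparison flags ReLU, Leaky ReLU, and SELU as having a derivative that jumps at zero. ELU passes the slope test only because alpha = 1 makes the two one-sided slopes match; other alpha values would introduce a jump.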
