Model Monitoring: The Million Dollar Problem // Loka Team // MLOps Meetup #87

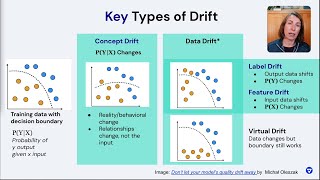

MLOps Community Meetup #87 with Loka Team!

// Technical note
We started recording a bit late on this one, so there's a transcript of the first few minutes at the bottom of this description.

// Abstract
Model and data monitoring is a crucial part of a production ML system. A lot can go wrong: model drift, data anomalies, or upstream data or processing failures. And mistakes can be costly if ML drives mission-critical systems like credit approval or fraud detection, easily meaning millions of dollars lost for large organizations. So why do so few companies have ML monitoring fully implemented?

The Loka team works with venture-backed startups and innovation programs in enterprises to implement end-to-end ML systems, and brings that diverse experience to this Meetup. Several of Loka's MLOps experts will provide a thorough overview of model monitoring, its benefits for your business, and reference architectures to get you started.

---------- ✌️Connect With Us ✌️------------
Join our Slack community: https://go.mlops.community/slack
Follow us on Twitter: @mlopscommunity
Sign up for the next meetup: https://go.mlops.community/register
Connect with Demetrios on LinkedIn: / dpbrinkm
Connect with Alexandre on LinkedIn: / ajdomingues
Connect with Diogo on LinkedIn: / diogocald…
Connect with Nicolás on LinkedIn: / nicolas-r…
Connect with Bojan on LinkedIn: / bojanilij. .

// Transcript
Emily: Welcome, everybody, to today's session, Model Monitoring: The Million Dollar Problem. We have a great team of ML engineers from Loka, and we're really excited to give you a general overview of the space and some deep dives into a couple of technologies that we've worked with here at Loka. So, to get started: why the million-dollar problem?
So, model monitoring is one of those tools that very few companies have implemented today, which is surprising, because we're seeing increasing numbers of machine learning systems running in production and powering real production systems, like recommendation systems, fraud models, et cetera. And there's an awful lot that can go wrong in these complex systems. Just in the work that we do with customers, we see model monitoring really helping to catch and prevent problems that could end up costing our customers lots of money. One example I can think of right off the top, from a prior life, is a customer who had a model doing credit approvals. These real-time credit approvals were mediated entirely through this online model. They weren't able to identify an upstream data failure, and this ended up resulting in the approval of people with lower credit scores than they would normally approve, which is obviously something fraudsters can catch on to relatively quickly. It was a really catastrophic loss of money in a short period of time before the problem was discovered and remediated. These are obviously worst-case scenarios, but even small deviations in customer experience and uncaught errors in the system can have a major impact. It's great that so many tools are starting to emerge in the market to help you address these problems. So with that, I'm going to hand it over to Diogo.

Diogo: Things never go to plan. You've deployed your model, but now what? What will happen to its performance from now on? And when you're dealing with people who aren't inside the data science field, as we all are, this can be a problem. Your boss, your stakeholders, or your clients have different expectations of you, and things can go really poorly. When they hear ML or AI, they typically think of something like this (first slide shown in the video): something that will learn over time.
As we all know, this is really bad, and we need to manage their expectations. But we also need to manage our own expectations, because, let's face it, we all hope machine learning would work like this: a sort of deterministic system where we're 100% sure that once we deploy it, it will keep working perfectly forever, exactly the way it came off the assembly line. But we've got to be realistic, and we all know that ain't gonna happen. Most likely, performance will decrease. It can increase, it can decrease, it can do whatever it wants. We simply don't know, and that's why it's so important to keep track of what our model is doing and keep monitoring it.

// Disclaimer
Loka is not responsible for any errors or omissions, or for the results obtained from the use of this information. All information in this podcast is provided "as is", with no guarantee of completeness, accuracy, timeliness, or of the results obtained from the use of this information. The omitted first portion of the podcast is transcribed above.
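
The abstract's mention of model drift and data anomalies, and Emily's credit-approval story (an upstream data failure that quietly shifted which applicants were approved), describe the kind of problem a basic input-distribution check can surface. As a minimal sketch, assuming you monitor a single numeric feature such as an applicant's credit score, the snippet below computes the Population Stability Index (PSI) between a reference window and a current window. PSI is a common drift metric picked here for illustration; the talk does not say which method Loka uses. The function names, the equal-width binning, the `eps` floor, and the 0.25 alert threshold (a widely used rule of thumb) are all assumptions, and the credit scores are synthetic.

```python
import bisect
import math
from typing import List, Sequence


def _bin_fractions(values: Sequence[float], edges: List[float]) -> List[float]:
    """Share of `values` in each bin; out-of-range values are clipped into the end bins."""
    n_bins = len(edges) - 1
    counts = [0] * n_bins
    for v in values:
        idx = bisect.bisect_right(edges, v) - 1
        idx = min(max(idx, 0), n_bins - 1)  # clip below-range and above-range values
        counts[idx] += 1
    return [c / len(values) for c in counts]


def population_stability_index(
    reference: Sequence[float],
    current: Sequence[float],
    bins: int = 10,
    eps: float = 1e-4,
) -> float:
    """PSI = sum over bins of (cur - ref) * ln(cur / ref).

    Hypothetical helper for illustration: equal-width bins over the reference
    range; `eps` keeps empty bins from producing log(0).
    """
    if not current:
        raise ValueError("current window is empty")
    lo, hi = min(reference), max(reference)
    if hi == lo:
        raise ValueError("reference window has no spread; cannot build bins")
    width = (hi - lo) / bins
    edges = [lo + i * width for i in range(bins + 1)]
    ref = _bin_fractions(reference, edges)
    cur = _bin_fractions(current, edges)
    psi = 0.0
    for r, c in zip(ref, cur):
        r, c = max(r, eps), max(c, eps)
        # Each term is >= 0 because (c - r) and ln(c / r) share the same sign.
        psi += (c - r) * math.log(c / r)
    return psi


# Assumed alert level: 0.25 is a common rule of thumb, not a value from the talk.
PSI_ALERT_THRESHOLD = 0.25

if __name__ == "__main__":
    # Synthetic credit scores, made up for illustration only.
    reference_scores = [600 + (i % 200) for i in range(1000)]  # 600..799
    shifted_scores = [500 + (i % 200) for i in range(1000)]    # simulated upstream bug: 100 points lower
    for name, window in [("stable", reference_scores), ("shifted", shifted_scores)]:
        score = population_stability_index(reference_scores, window)
        status = "ALERT" if score > PSI_ALERT_THRESHOLD else "ok"
        print(f"{name}: PSI={score:.3f} {status}")
```

PSI only says that the inputs moved, not whether the model's decisions got worse, so in practice a check like this would sit alongside performance monitoring once ground-truth labels arrive, which is the gap Diogo points to.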
