KDD2024 - ChatGLM: A Family of Large Models

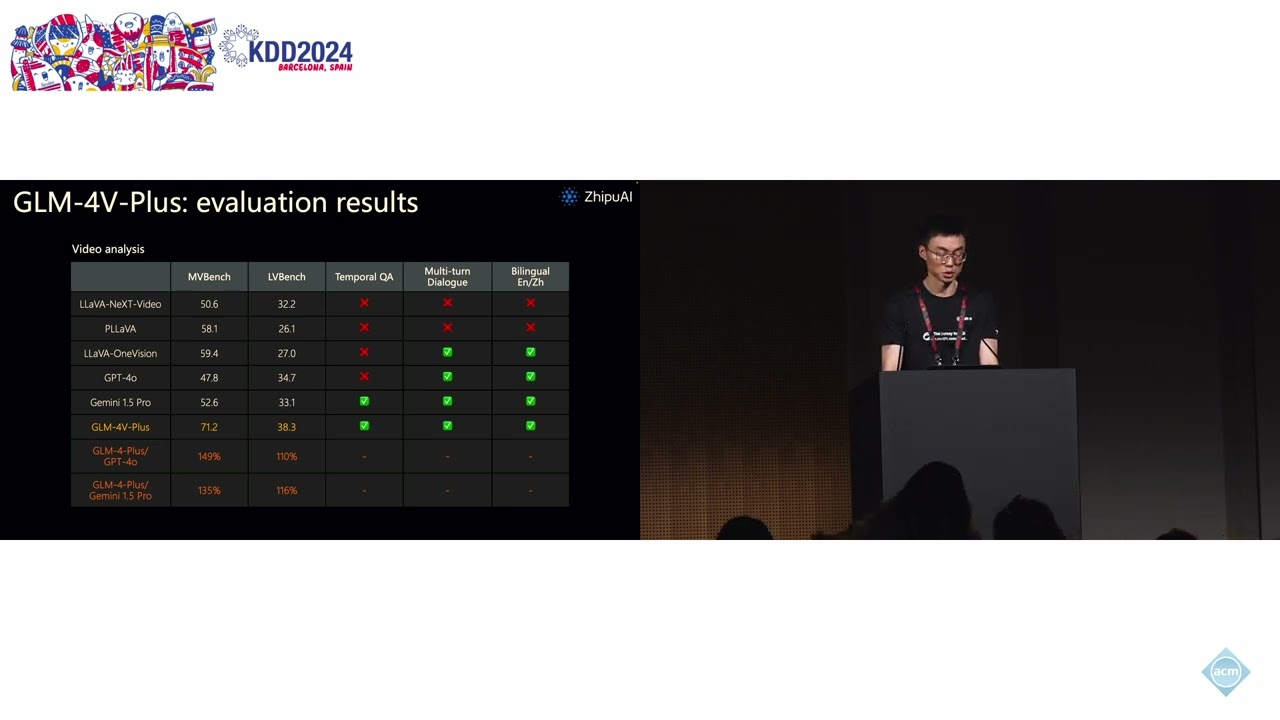

Xiaotao Gu, Zhipu AI

Large Language Model Day Keynote

Abstract: We introduce ChatGLM, an evolving family of large models we have been developing at Zhipu AI. The family includes our recent language, multi-modal, and agent models. They represent our most capable models trained with all the insights and lessons gained from the preceding three generations of ChatGLM. To date, the GLM-4 models are pre-trained on ten trillion tokens, mostly in Chinese and English, along with a small corpus drawn from 24 languages, and aligned primarily for Chinese and English usage. The CogVLM, CogView, and CogAgent models have shown competitive results in image understanding, image generation, and vision-based agent capabilities.

Bio: Xiaotao Gu is currently a researcher at Zhipu AI. He received his Ph.D. degree from the University of Illinois at Urbana-Champaign. He was a member of the Knowledge Engineering Group at Tsinghua University. His research interests lie primarily in massive text data mining and large language models. He worked as a research scientist at Huawei. He participated in the development of several systems at Google, ranging from relation extraction and news story headline generation to the acceleration of language model pre-training.