Finding and Triaging Open Directories with SubCrawl скачать в хорошем качестве

subcrawl

open directories

opendir

malware

threat hunting

cyber

cybersecurity

training

malware analysis

reverse engineering

education

educational

getting started

help

how-to

mitre

mitre attack

mitre defend

cyber security

threat analysis

ida pro

ghidra

remnux

suricata

network traffic

network traffic analysis

career

professional development

cyber training

career advancement

prep

security tools

cyber tools

penetration testing

workshops

technical

pcap

Повторяем попытку...

Скачать видео с ютуб по ссылке или смотреть без блокировок на сайте: Finding and Triaging Open Directories with SubCrawl в качестве 4k

У нас вы можете посмотреть бесплатно Finding and Triaging Open Directories with SubCrawl или скачать в максимальном доступном качестве, видео которое было загружено на ютуб. Для загрузки выберите вариант из формы ниже:

-

Информация по загрузке:

Скачать mp3 с ютуба отдельным файлом. Бесплатный рингтон Finding and Triaging Open Directories with SubCrawl в формате MP3:

Если кнопки скачивания не

загрузились

НАЖМИТЕ ЗДЕСЬ или обновите страницу

Если возникают проблемы со скачиванием видео, пожалуйста напишите в поддержку по адресу внизу

страницы.

Спасибо за использование сервиса ClipSaver.ru

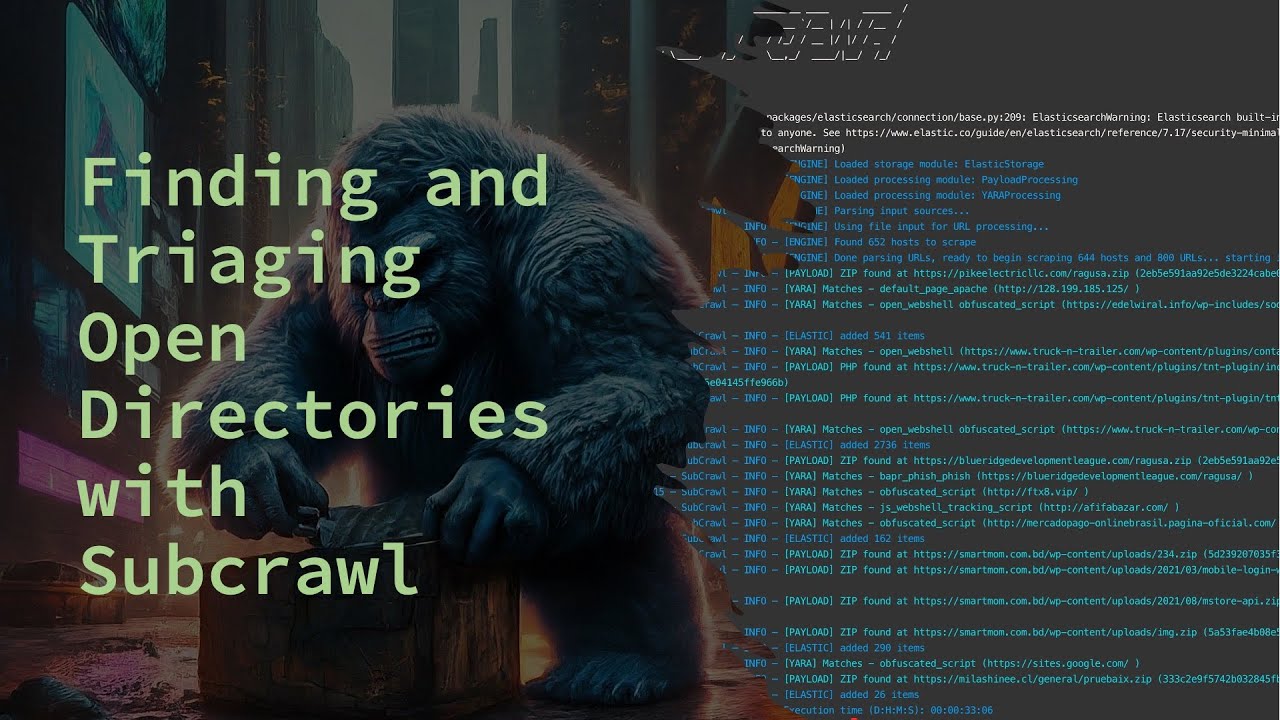

Finding and Triaging Open Directories with SubCrawl

In this video, I'll introduce you to the Subcrawl framework - which was designed to ease the process of finding and triaging content found in open directories. We'll start with a brief background on open directories, then jump right into Subcrawl - where to find it, how to install it, the overall structure and then how to start crawling URLs. This will be the first of several videos, so stay tuned for more content! Cybersecurity, reverse engineering, malware analysis and ethical hacking content! 🎓 Courses on Pluralsight 👉🏻 https://www.pluralsight.com/authors/j... 🌶️ YouTube 👉🏻 Like, Comment & Subscribe! 🙏🏻 Support my work 👉🏻 / joshstroschein 🌎 Follow me 👉🏻 / jstrosch , / joshstroschein ⚙️ Tinker with me on Github 👉🏻 https://github.com/jstrosch A more detailed description of Subcrawl: SubCrawl is a framework developed by Patrick Schläpfer, Josh Stroschein and Alex Holland of HP Inc’s Threat Research team. SubCrawl is designed to find, scan and analyze open directories. The framework is modular, consisting of four components: input modules, processing modules, output modules and the core crawling engine. URLs are the primary input values, which the framework parses and adds to a queuing system before crawling them. The parsing of the URLs is an important first step, as this takes a submitted URL and generates additional URLs to be crawled by removing sub-directories, one at a time until none remain. This process ensures a more complete scan attempt of a web server and can lead to the discovery of additional content. Notably, SubCrawl does not use a brute-force method for discovering URLs. All the content scanned comes from the input URLs, the process of parsing the URL and discovery during crawling. When an open directory is discovered, the crawling engine extracts links from the directory for evaluation. The crawling engine determines if the link is another directory or if it is a file. Directories are added to the crawling queue, while files undergo additional analysis by the processing modules. Results are generated and stored for each scanned URL, such as the SHA256 and fuzzy hashes of the content, if an open directory was found, or matches against YARA rules. Finally, the result data is processed according to one or more output modules, of which there are currently three. The first provides integration with MISP, the second simply prints the data to the console, and the third stores the data in an SQLite database. Since the framework is modular, it is not only easy to configure which input, processing and output modules are desired, but also straightforward to develop new modules.