
pytorch batchnorm1d

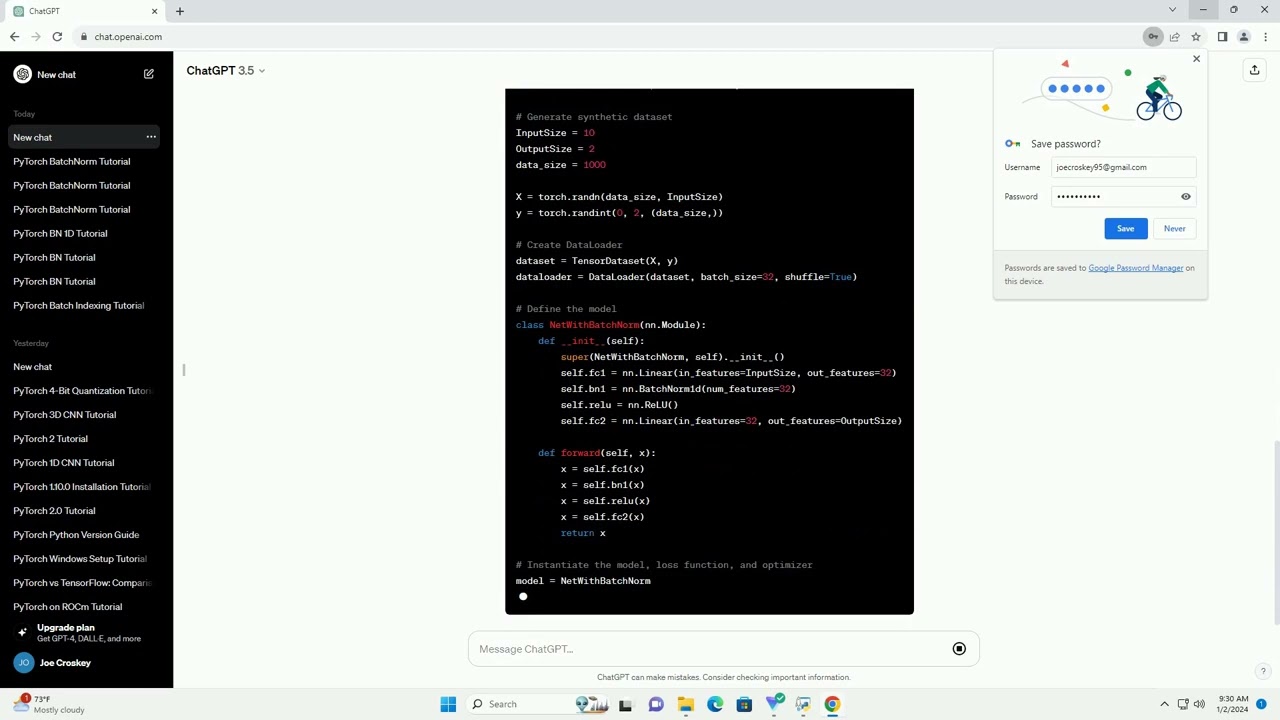

Download this code from https://codegive.com

Batch normalization is a technique that improves the training of deep neural networks by normalizing the input to each layer, which helps stabilize and accelerate training. In PyTorch, the BatchNorm1d module applies batch normalization to 1D data and is commonly placed after fully connected layers. This tutorial covers what batch normalization does, how to configure BatchNorm1d, and how to use it in a simple neural network.

Batch normalization normalizes each feature by subtracting the mini-batch mean and dividing by the mini-batch standard deviation, then applies a learnable scale and shift. This helps mitigate issues such as vanishing or exploding gradients, and it also acts as a mild form of regularization. In evaluation mode the layer uses running estimates of the mean and variance collected during training instead of the statistics of the current batch.

The BatchNorm1d module is designed for 1D data: it accepts input of shape (N, C) or (N, C, L), which makes the (N, C) case a natural fit for the output of a fully connected layer. It is easy to integrate into a network architecture. Its required argument is the number of features in your data, and it has optional parameters such as eps (a small constant added to the variance to avoid division by zero) and momentum (the update rate for the running averages computed during training). The basic syntax is `nn.BatchNorm1d(num_features, eps=1e-5, momentum=0.1)`, where the values shown for eps and momentum are the defaults.

A typical use is a simple neural network for a classification task, trained on a synthetic dataset for demonstration purposes, with BatchNorm1d placed between a linear layer and its activation. You can customize the model architecture, dataset, and training parameters for your specific use case.
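
The original code for this tutorial did not survive on this page, so the following is only a minimal sketch of the kind of example described above, not the author's code. The class name `SimpleNet`, the layer sizes, the synthetic labelling rule, and the training settings are all assumptions chosen for illustration. The first part checks that, in training mode and with freshly initialized parameters, BatchNorm1d matches a manual per-feature normalization; the second part trains a small classifier on synthetic data.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Part 1: compare BatchNorm1d in training mode with a manual normalization.
bn = nn.BatchNorm1d(num_features=4)  # defaults: eps=1e-5, momentum=0.1
bn.train()
x = torch.randn(8, 4)                # batch of 8 samples, 4 features
y = bn(x)

mean = x.mean(dim=0)
var = x.var(dim=0, unbiased=False)   # biased variance is used to normalize
manual = (x - mean) / torch.sqrt(var + bn.eps)
# A fresh layer has scale 1 and shift 0, so the two results should agree.
bn_matches_manual = torch.allclose(y, manual, atol=1e-5)


# Part 2: a small classifier (hypothetical architecture, for illustration).
class SimpleNet(nn.Module):
    def __init__(self, in_features=20, hidden=32, num_classes=2):
        super().__init__()
        self.fc1 = nn.Linear(in_features, hidden)
        self.bn1 = nn.BatchNorm1d(hidden)  # normalizes the hidden features
        self.fc2 = nn.Linear(hidden, num_classes)

    def forward(self, x):
        x = torch.relu(self.bn1(self.fc1(x)))
        return self.fc2(x)


# Synthetic dataset: the label depends on the sign of the first two features.
X = torch.randn(256, 20)
labels = (X[:, 0] + X[:, 1] > 0).long()

model = SimpleNet()
criterion = nn.CrossEntropyLoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

model.train()                        # batch statistics are used here
losses = []
for epoch in range(20):              # full-batch training steps
    optimizer.zero_grad()
    loss = criterion(model(X), labels)
    loss.backward()
    optimizer.step()
    losses.append(loss.item())

model.eval()                         # running statistics are used here
with torch.no_grad():
    single = model(X[:1])            # a batch of one is fine in eval mode

print("BatchNorm1d matches manual normalization:", bn_matches_manual)
print("first loss:", losses[0], "last loss:", losses[-1])
print("single-sample output shape:", tuple(single.shape))
```

Switching between `model.train()` and `model.eval()` matters for this layer: in training mode it normalizes with the current batch's statistics and updates the running averages, while in evaluation mode it uses those running averages, which is why a single sample can be passed through the model after `model.eval()`.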