Handling Distribution Shift in Visual Learning

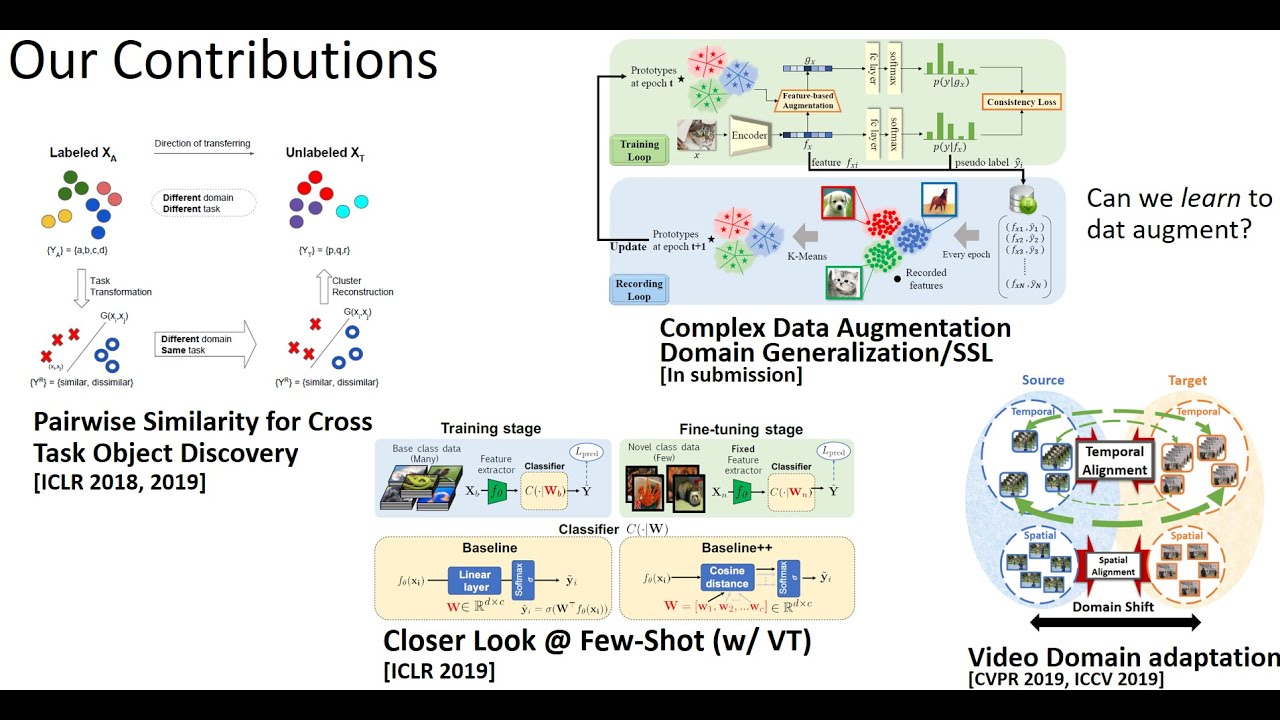

Abstract: While deep learning has achieved remarkable successes in computer vision, both the theory and the practice behind these successes have fundamentally relied on vanilla supervised learning, in which the training and test datasets are sampled from the same distribution. In reality, a significant distribution shift is likely once models are deployed, whether from changes in noise, weather, illumination, or modality (covariate shift), from new categories (semantic shift), or from different label distributions. In this talk, I will present our recent work on handling several of these shifts at a fundamental level. For label distribution shift, we propose a posterior recalibration of classifiers that can be applied without retraining to handle imbalanced datasets. For covariate and semantic shift, we propose a geometric decoupling of classifiers into feature norms and angles, and show that it can be used to learn more sensitive feature spaces for better calibration and out-of-distribution detection. We demonstrate state-of-the-art results across multiple benchmark datasets and metrics. Finally, I will present connections to a wider set of problems, including continual/lifelong learning, open-set discovery, and semi-supervised learning.
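The abstract does not spell out either method, so the following is only a rough illustration of the two ideas it names, not the speakers' algorithms. For label shift it uses the textbook Bayes prior correction, p_test(y|x) ∝ p_train(y|x) · p_test(y) / p_train(y), which, like the recalibration described above, is applied post hoc without retraining. For the geometric view it splits ordinary linear logits w_k · f into ‖f‖ · ‖w_k‖ · cos θ_k. The function names, the assumed class priors, and the max-cosine out-of-distribution score are all hypothetical choices made for this sketch.

```python
import numpy as np


def softmax(logits):
    # Numerically stable softmax over the last axis.
    z = logits - logits.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def recalibrate_posterior(probs, train_prior, test_prior):
    # Hypothetical helper: textbook Bayes prior correction (not the talk's method).
    # Reweight each class posterior by p_test(y) / p_train(y), then renormalize.
    w = np.asarray(test_prior, dtype=float) / np.asarray(train_prior, dtype=float)
    adjusted = probs * w
    return adjusted / adjusted.sum(axis=-1, keepdims=True)


def norm_angle_decomposition(features, weights):
    # Split linear logits f . w_k into ||f|| * ||w_k|| * cos(theta_k).
    # features: (N, D) penultimate-layer features; weights: (K, D) class weights.
    f_norm = np.linalg.norm(features, axis=-1, keepdims=True)  # (N, 1)
    w_norm = np.linalg.norm(weights, axis=-1)                  # (K,)
    cos = (features @ weights.T) / (f_norm * w_norm)           # (N, K)
    return f_norm[:, 0], w_norm, cos


def max_cosine_score(features, weights):
    # Hypothetical OOD score: a higher maximum cosine to any class weight
    # is treated as "more in-distribution"; feature norm is ignored.
    _, _, cos = norm_angle_decomposition(features, weights)
    return cos.max(axis=-1)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    logits = rng.normal(size=(4, 3))
    train_prior = np.array([0.8, 0.15, 0.05])  # assumed long-tailed training labels
    test_prior = np.full(3, 1.0 / 3.0)         # assumed balanced test labels
    print(recalibrate_posterior(softmax(logits), train_prior, test_prior))

    feats = rng.normal(size=(4, 8))
    W = rng.normal(size=(3, 8))
    print(max_cosine_score(feats, W))
```

Separating the angle from the norm is what lets a score such as max cosine ignore feature magnitude; whether the norm or the angle should drive calibration and OOD detection is exactly the kind of design question the talk addresses, and this sketch makes no claim about the answer.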
